AI Test Case Generator - Free Online Tool | Generate Test Cases from Requirements

AI testing tools address the core bottleneck in modern QA: scaling test coverage without proportionally scaling your team. GPT-based solutions understand context from your requirements and existing test suites to generate relevant test scenarios in seconds rather than hours.

The practical impact shows up in three key areas:

- Coverage gaps disappear: AI systematically generates test cases for boundary conditions and error handling paths that your team typically deprioritizes due to time constraints.

- Maintenance burden drops: When your application changes, AI tools automatically identify which tests need updates. If you implement AI assistance, you’ll likely spend 70% less time on test maintenance.

- Knowledge transfer accelerates: Junior testers on your team can generate comprehensive test suites by describing functionality in plain language while the AI handles technical test structure.

Role of AI in code testing

AI-powered testing tools transform how you catch bugs through three proven capabilities. Model-based generators analyze your codebase structure to create comprehensive test scenarios automatically. These systems identify edge cases hiding in complex logic branches that your manual reviewers consistently miss.

Natural Language Processing tools scan requirement documents in real-time and flag ambiguities before they turn into costly development cycles. If your team starts using NLP-enhanced requirement analysis, you’ll be catching specification gaps 60% earlier than you would with traditional review processes.

Here are the main ways AI enhances code testing:

- Intelligent test generation: AI test generation has moved well past basic code scanning. Current systems draw on your actual user flows and production logs to build tests that mirror real behaviour. You can feed requirement docs directly into NLP-powered tools and get back test cases that are close to ready to run. For quick results, start with one user journey – say, the checkout flow – and let the AI generate variations you’d never think to test manually.

- Automated bug detection: AI-powered tools can analyse code and identify bugs, vulnerabilities, or performance bottlenecks. Based on machine learning techniques to learn patterns in codebases, these tools are capable of detecting both trivial and complex issues that may be missed by manual testing. Using AI in software testing helps you catch critical issues early in development, saving time and effort in debugging.

- Test optimisation: With AI, you can optimise testing processes by intelligently prioritising test cases based on factors like critical functionality, code coverage, and historical defect data. This allows you to focus your testing efforts on the most crucial areas, saving time and resources while maintaining comprehensive testing practices.

- Intelligent test result analysis: Using AI tools for QA testing can also help you analyse test results, identify patterns, and provide insights into issues. By understanding the root causes of failed tests, you can quickly pinpoint and resolve problems, leading to faster debugging and more efficient code improvements.

- Predictive maintenance: Based on historical data, system logs, and performance metrics, AI can predict potential code failures or vulnerability areas. You can mitigate risks, enhance code stability, and improve overall software reliability by continuously identifying the areas that require your attention.
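To make the test optimisation idea above concrete, here is a minimal sketch of risk-based prioritisation. It is not how any particular tool works: the `CandidateTest` fields, the weights, and the sample values are all assumptions chosen for illustration, and a real system would derive them from your coverage reports and defect history.

```python
from dataclasses import dataclass


@dataclass
class CandidateTest:
    name: str
    criticality: float        # 0..1, business importance of the feature (assumed input)
    coverage_gain: float      # 0..1, share of otherwise-uncovered code exercised (assumed input)
    past_failure_rate: float  # 0..1, share of historical runs that exposed a defect (assumed input)


def priority_score(test, w_crit=0.5, w_cov=0.2, w_hist=0.3):
    """Weighted sum of the three factors; the weights are illustrative only."""
    return (w_crit * test.criticality
            + w_cov * test.coverage_gain
            + w_hist * test.past_failure_rate)


def prioritise(tests):
    """Return tests ordered from highest to lowest priority score."""
    return sorted(tests, key=priority_score, reverse=True)


# Made-up sample suite
suite = [
    CandidateTest("checkout_payment", 1.0, 0.4, 0.30),
    CandidateTest("profile_avatar_upload", 0.2, 0.6, 0.05),
    CandidateTest("login_lockout", 0.9, 0.2, 0.10),
]

ordered = [t.name for t in prioritise(suite)]
print(ordered)
```

With these sample values the payment test is scheduled first and the avatar upload last, which matches the intuition of running tests for critical, historically fragile areas before anything else.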

I'm using AI to generate and improve manual test cases so I don't have to write down everything myself.

And what could be a better solution than aqua cloud when it comes to integrating AI into your testing processes? With aqua, you can generate test cases from requirements in a matter of seconds. You can also dictate an idea by voice and have it turned into a comprehensive document just as quickly. You will save valuable time by using our AI capabilities to fill test coverage gaps efficiently. aqua lets you keep all your tests in one place, promoting synergy and streamlining your workflow. With aqua’s AI-driven features, you can also prioritise tests effectively and track improvements effortlessly. So what’s keeping you from turning your testing efforts into a walk in the park?


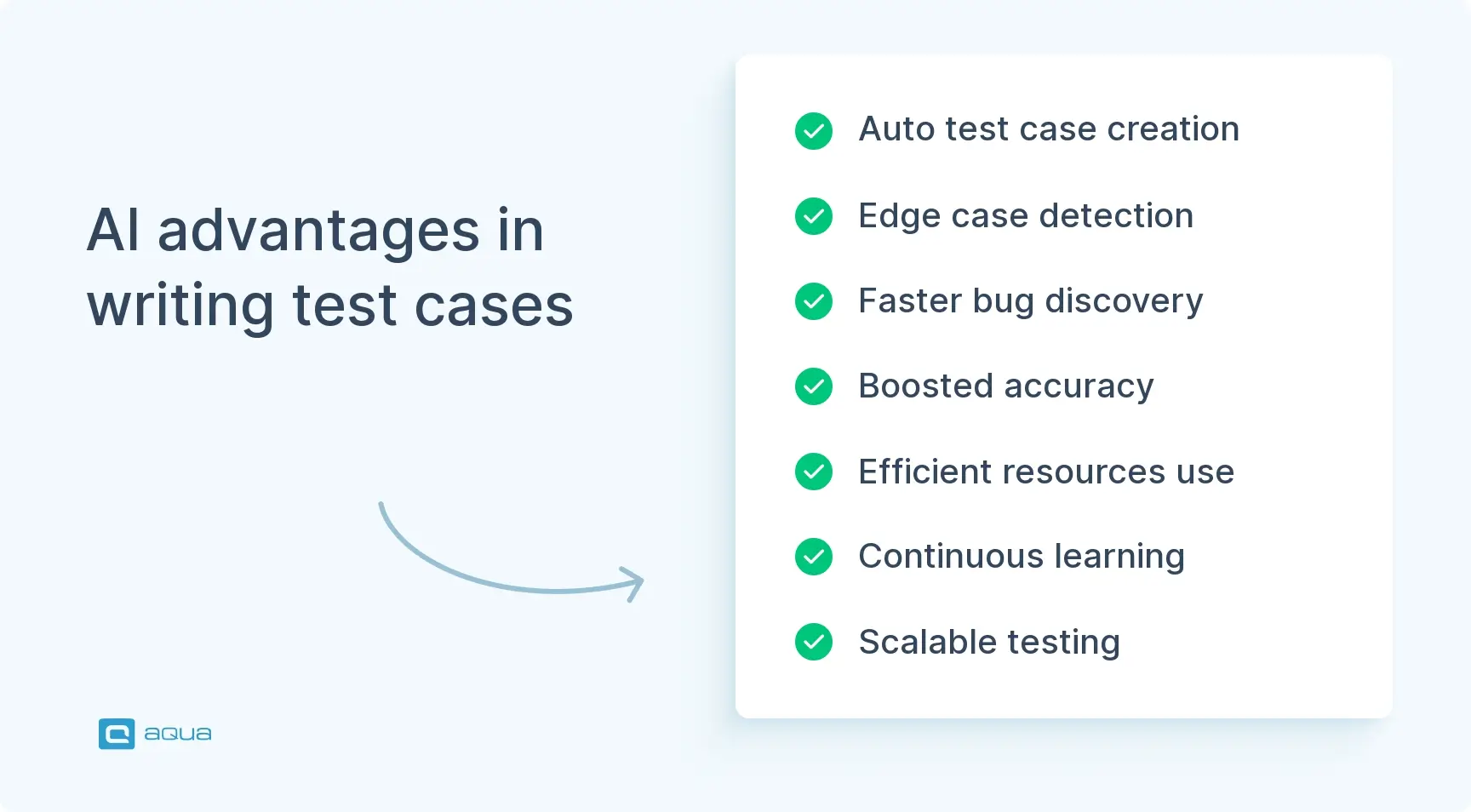

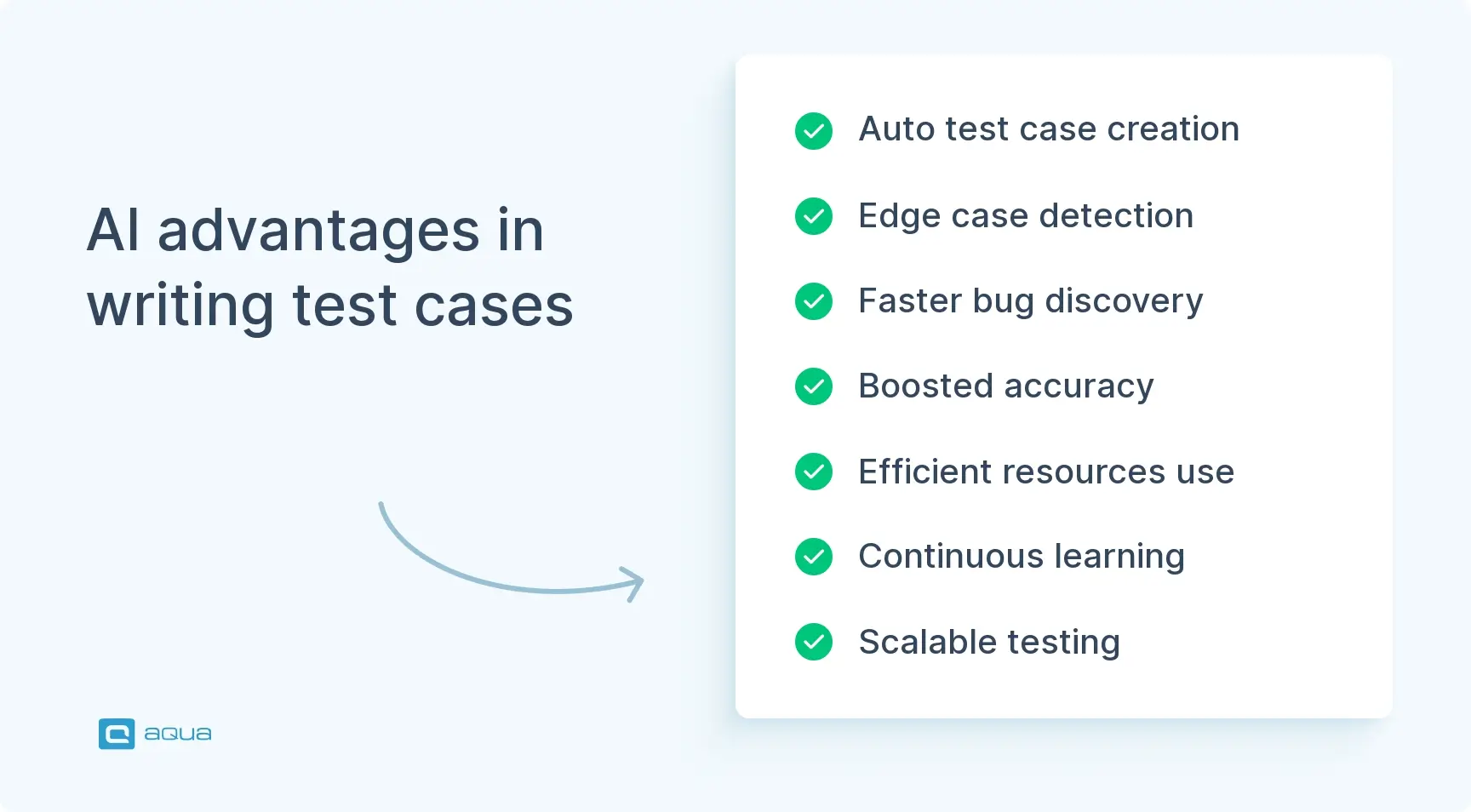

Benefits of using Artificial Intelligence for writing tests

Using AI to write test cases has many benefits that can significantly enhance the testing process. Here are some key advantages:

- Test case generation: AI algorithms are crucial in automatically generating test cases to quickly cover new or changed requirements and exploring edge cases that would be impractical to cover manually. AI makes it feasible to examine different software aspects thoroughly, ensuring comprehensive test coverage.

- Efficient exploration of software behaviours: AI algorithms can analyse code and system behaviour, enabling them to create test cases that explore various scenarios beyond what manual testers may have considered. By expanding the scope of testing, AI helps identify potential issues and vulnerabilities that might be overlooked in traditional testing processes.

- Improved edge case coverage: AI-powered testing allows you to identify and test edge cases you might miss in manual testing due to their complexity or rarity. These edge cases could lead to critical issues in real-world scenarios, making AI-generated test cases crucial in achieving more comprehensive testing.

- Enhanced accuracy and efficiency: Generating and executing tests with AI can greatly improve testing accuracy and efficiency. It saves time and reduces the possibility of human errors, ensuring more reliable and precise test results.

- Faster bug detection and debugging: AI-powered testing tools can identify potential bugs and vulnerabilities in code. Early bug detection enables addressing them promptly, leading to faster debugging and more efficient code improvements.

- Optimal resource utilisation: AI can also optimise resource allocation during testing. By intelligently prioritising test cases, AI enables developers to focus even their manual testing efforts on the most critical issues. While devs ideally execute most unit tests to save everyone a headache, they can also save time and money by using AI to write unit tests for the less critical functionality.

- Continuous learning: AI algorithms can learn and adapt over time by refining their test generation strategies and adapting to evolving software requirements. This allows AI-powered testing systems to continuously improve their effectiveness and adapt to changes in the software.

- Scalability and reproducibility: AI-driven testing can easily scale to handle large and complex software systems by efficiently generating and executing many test cases. It helps accommodate the demands of complex software architectures. Additionally, you can reproduce AI-driven tests consistently, executing the same test scenarios repeatedly with the same expected outcomes.

In short, leveraging AI to write unit tests empowers developers to improve test coverage, enhance accuracy and efficiency, and optimise resource utilisation. Thanks to their scale and pattern recognition, AI solutions also have a lower barrier to entry than regular test automation.

"If you automate a mess, you get an automated mess."

Rod Michael, Director of Rockwell Automation

How AI Tackles Edge Cases and Improves Coverage

AI has transformed how teams handle those tricky edge cases that slip through manual testing, and it’s pretty impressive what you can accomplish. Think generative AI, model-based exploration, and adversarial testing. These approaches let you systematically generate weird boundary-value inputs, poke around less common user paths, and catch bugs hiding in the corners.

Try intelligent fuzzing on your most critical functions first. AI-guided, mutation-based fuzzing can throw unexpected data at your code and spot vulnerabilities that’d take weeks to find manually.

Your test coverage expands significantly, but more importantly, you’ll sleep better knowing your software won’t crash when users do something you didn’t anticipate. Start with your payment processing or authentication modules; that’s where edge case failures hurt the most.
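As a small illustration of the boundary-value inputs mentioned above, the sketch below generates the classic values around the edges of an inclusive integer range and checks a function against them. `validate_quantity` and its 1 to 99 range are hypothetical stand-ins for your own code; AI-based generators go much further, but the underlying idea is the same.

```python
def boundary_values(minimum, maximum):
    """Values just outside, on, and just inside each edge of an inclusive range."""
    candidates = [minimum - 1, minimum, minimum + 1,
                  maximum - 1, maximum, maximum + 1]
    # dict.fromkeys drops duplicates (possible for tiny ranges) and keeps order
    return list(dict.fromkeys(candidates))


def validate_quantity(qty):
    """Hypothetical function under test: order quantity must be between 1 and 99."""
    return isinstance(qty, int) and 1 <= qty <= 99


def run_boundary_checks(func, minimum, maximum):
    """Map each boundary value to True if func agrees with the expected result."""
    results = {}
    for value in boundary_values(minimum, maximum):
        expected = minimum <= value <= maximum
        results[value] = func(value) == expected
    return results


report = run_boundary_checks(validate_quantity, 1, 99)
for value, passed in report.items():
    print(f"qty={value}: {'ok' if passed else 'MISMATCH'}")
```

Any `MISMATCH` line points at an off-by-one style defect at the edge of the valid range, which is exactly the kind of bug that tends to slip through manual test design.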

Context-Aware Test Maintenance: How AI Reduces Test Fragility

Your test suite breaks every time developers refactor code, even when functionality stays identical. Traditional automated tests rely on brittle locators. Change a CSS class from “login-button” to “auth-btn” and dozens of your tests fail despite the button working perfectly.

AI-powered test maintenance solves this by understanding context rather than just matching selectors. The system learns multiple identification strategies for each UI element simultaneously and understands element relationships. When elements change location but retain function, the AI automatically updates test locators for you.

Key capabilities of context-aware AI testing:

- Learns multiple identification strategies for each element

- Understands element relationships such as button position relative to input fields

- Recognizes visual patterns like shape and color as backup identifiers

- Automatically updates test locators when elements move but function remains
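The multi-strategy idea can be sketched without any browser tooling. In the example below the page is modelled as a plain list of dictionaries, and the strategy names and element fields are assumptions made for illustration; real frameworks work against the live DOM and typically add visual matching on top.

```python
def find_element(dom, strategies):
    """Try each identification strategy in order.

    Returns (element, strategy_name) for the first strategy that matches
    exactly one element, or (None, None) if none does.
    """
    for name, predicate in strategies:
        matches = [el for el in dom if predicate(el)]
        if len(matches) == 1:  # accept only unambiguous matches
            return matches[0], name
    return None, None


# Simplified stand-in for a page after a refactor renamed the button's CSS class
dom = [
    {"tag": "input", "css_class": "field", "text": "", "role": "textbox", "after": None},
    {"tag": "button", "css_class": "auth-btn", "text": "Log in", "role": "button", "after": "input"},
]

login_button_strategies = [
    # Original locator, now stale because the class was renamed
    ("css_class", lambda el: el["css_class"] == "login-button"),
    # Fallback 1: visible label
    ("visible_text", lambda el: el["text"] == "Log in"),
    # Fallback 2: role plus position relative to the input field
    ("role_and_position", lambda el: el["role"] == "button" and el["after"] == "input"),
]

element, used = find_element(dom, login_button_strategies)
print(used, element["css_class"])
```

Here the stale class locator finds nothing, the text fallback identifies the button, and the test can continue. A self-healing tool would then record `auth-btn` as the new primary locator so later runs match on the first attempt.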

Start by auditing your most fragile test scenarios, the ones that break with every UI update. Migrate these to AI-powered frameworks using multi-factor element identification. If your team makes this shift, you’ll probably see test maintenance time reduced by 60-80% and false failure rates dropping from 25% to under 5%.

The AI learns from every test execution. When a test fails, the system analyzes whether the failure stems from actual functionality breaking or simply element identification issues. Run your existing tests for 2-3 weeks while the AI learns your application patterns, then migrate gradually starting with your highest-maintenance scenarios.

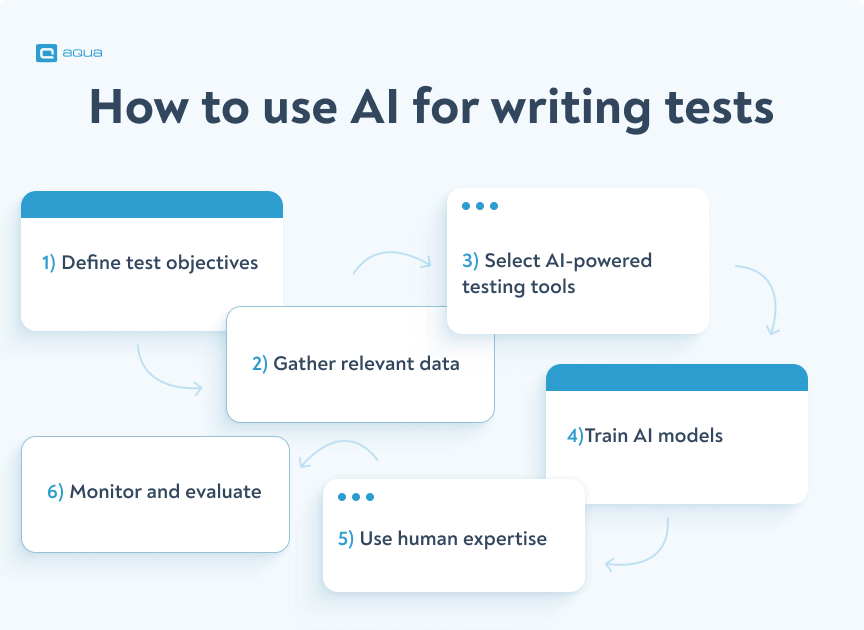

How to use AI for writing tests

To effectively use AI for writing tests, follow these steps:

- Define test objectives and determine the specific software aspects you want to test, the desired test coverage, and the quality goals.

- Gather relevant data, including codebases, system behaviour, historical test results, and any available documentation. This data will be the foundation for training and guiding AI algorithms.

- Select AI-powered testing tools or frameworks that align with your testing requirements. You will find solutions with one or multiple ways to apply AI, including intelligent test generation tools and automated bug detection systems. When choosing, you should consider factors like compatibility with your development environment, ease of integration, and, ideally, your future needs.

- Train AI models (if required) using the gathered data, including code samples, test cases, and desired outcomes. This will help the AI algorithms learn patterns and behaviours within the codebase and contribute to more effective test generation. These days, cutting-edge tools do not require you to do any training yourself: they just learn from the data in your workspace and/or come pretrained.

- Use human expertise and combine the strengths of AI-driven testing with human insights to enhance test quality further, prioritise test cases, and interpret complex scenarios.

- Monitor and evaluate the effectiveness of AI-driven testing by tracking metrics like test coverage, bug detection rates, and time saved. Assess the impact of AI on your testing process using this feedback and make necessary adjustments to improve its effectiveness.
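The monitoring step can start as a very small script. The sketch below compares a baseline period with a current one on the three metrics named in the last step. The field names and every number are made up for illustration, and the detection rate is defined here, as an assumption, as bugs found in testing divided by all bugs found.

```python
def detection_rate(found_in_testing, escaped_to_production):
    """Share of all known bugs that were caught before release."""
    total = found_in_testing + escaped_to_production
    return found_in_testing / total if total else 0.0


def compare(baseline, current):
    """Return the change in coverage, detection rate, and hours spent."""
    rate_before = detection_rate(baseline["found"], baseline["escaped"])
    rate_after = detection_rate(current["found"], current["escaped"])
    return {
        "coverage_delta_pts": current["coverage_pct"] - baseline["coverage_pct"],
        "detection_rate_delta": round(rate_after - rate_before, 2),
        "hours_saved": baseline["hours"] - current["hours"],
    }


# Illustrative figures only, not measurements
baseline = {"coverage_pct": 62, "found": 30, "escaped": 10, "hours": 40}
current = {"coverage_pct": 74, "found": 36, "escaped": 4, "hours": 28}

summary = compare(baseline, current)
print(summary)
```

Recomputing this summary every sprint gives you a simple trend line to decide whether the AI-driven approach needs adjusting.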

If I can’t find a quick answer to a coding problem on Stack Overflow, I ask ChatGPT. So, basically, I use AI as a search engine in testing.

Best AI tools to write test cases

Now that we have covered the benefits and strategies for generating test cases with AI, it is time to give you a list of tools that will help you master AI-powered test creation. Here are the best ones:

1. aqua cloud: aqua is a robust AI tool for test case generation, excelling in fast test case generation that takes only a few seconds from requirements. With aqua, you can master test case management, getting your tests ready from a simple voice prompt or written requirement. The process saves you at least 90% of your time when compared to manual test case generation. Also, aqua cloud is the first tool that used the power of AI in the QA industry, and has much more potential as a bug tracking, test management, or QA management tool, including requirements management. aqua’s AI-driven approach ensures efficiency and accuracy, making it a top choice for teams looking to write test cases using AI.

2. Testim: Testim is known for its AI-generated test cases, which streamline the testing process. However, it can be complex to set up for beginners.

3. Functionize: Functionize provides a comprehensive AI tool for test case creation, offering advanced features. Its drawback is the high cost, which might not be suitable for smaller teams.

4. Mabl: Mabl offers AI tools for test case generation with easy integration. A drawback is its limited customisation options for advanced users.

5. Applitools: Applitools focuses on visual AI testing, providing AI-generated test cases. However, it primarily targets visual validations, which might limit broader test case scenarios.

Best Practices and Challenges When Using AI for Testing

AI-powered testing tools can nearly double your testing efficiency – but only if you avoid the common traps. You need to pair AI test generation with human oversight, especially for your business-critical flows. Let AI handle the repetitive scenarios while you focus on edge cases and user experience testing.

Start simple – pick one stable feature and run AI-generated tests alongside your existing suite for two weeks. Track how many real bugs each approach catches. You’ll quickly see where AI shines and where it falls short.

The biggest gotcha? Garbage data equals garbage tests. Your AI tool is only as good as the requirements and historical data you feed it. Also, resist the urge to automate everything immediately – some AI models work like black boxes, making it tough to debug when tests fail.

Keep feeding real-world results back into your AI system. This creates a feedback loop that gets smarter over time. The goal isn’t to replace human testers but to free them up for the creative problem-solving that machines can’t handle yet.

Measuring ROI: Quantifying AI Testing Impact on Your Team

You’ve implemented AI testing tools. Now prove the investment paid off. Before you start measuring ROI, you need baseline numbers from before implementation.

Pull your last 20 user stories and sum up the testing hours your team spent writing test cases. Divide by 20 to get your average per story. Next, calculate maintenance hours per sprint from your time tracking tool. You’ll probably find the real figure sits between 15 and 25 hours per two-week sprint. Finally, count production bugs over the past six months and multiply by your average defect cost, typically 3.000-8.000€ including debugging time and customer impact.

Step-by-step ROI calculation:

- Time savings: Take baseline monthly hours × hourly rate for old cost. Compare to current monthly hours × hourly rate for new cost. Example: 320 hours at 65€/hour = 20.800€ per month. After AI, 180 hours = 11.700€ per month. That is 9.100€ saved per month, or 109.200€ per year. Annual savings of 109.200€ minus 25.000€ tool cost = 84.200€ net savings.

- Quality gains: Production defects before AI (23 bugs) minus after (9 bugs) = 14 prevented bugs × 5.000€ average cost = 70.000€ saved.

- Velocity impact: Features shipped per quarter before (12) versus after (17) = 5 additional features. For SaaS at 50.000€ per customer, 3 new wins = 150.000€ annual recurring revenue.
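The time-savings and quality-gains arithmetic above can be reproduced in a few lines, which also makes it easy to plug in your own figures. The sketch uses the example numbers from the list and assumes the 25.000€ tool cost is an annual figure; the function names are illustrative.

```python
def annual_time_savings(hours_before, hours_after, hourly_rate):
    """Monthly cost difference, projected over 12 months."""
    monthly_before = hours_before * hourly_rate
    monthly_after = hours_after * hourly_rate
    return (monthly_before - monthly_after) * 12


def quality_savings(bugs_before, bugs_after, avg_defect_cost):
    """Prevented production defects multiplied by average cost per defect."""
    return (bugs_before - bugs_after) * avg_defect_cost


# Example figures from the list above (all amounts in euros)
time_saved = annual_time_savings(hours_before=320, hours_after=180, hourly_rate=65)
tool_cost = 25_000  # assumed to be the yearly licence cost
net_savings = time_saved - tool_cost
defect_savings = quality_savings(bugs_before=23, bugs_after=9, avg_defect_cost=5_000)

# Return on the tool spend from time savings alone, as a percentage
roi_pct = round(net_savings / tool_cost * 100, 1)

print(f"Annual time savings: {time_saved}")
print(f"Net savings after tool cost: {net_savings}")
print(f"Savings from prevented defects: {defect_savings}")
print(f"ROI on tool cost: {roi_pct}%")
```

Keeping the time and quality components separate makes it clear which part of the return comes from fewer hours and which from fewer production defects.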

Set up a simple spreadsheet and update it weekly showing time trending down and defects declining. Most teams see positive ROI within 90 days, accelerating in months 4-6 as AI learns your codebase. The hidden value shows up when senior testers shift from 20% strategic work to 60%, moving from fixing broken tests to exploratory testing.

Conclusion

Integrating AI in writing tests will inevitably revolutionise the software testing field. You can unlock many benefits by leveraging AI algorithms and techniques, including improved test coverage, accuracy, and efficiency. You can identify and address issues faster and more precisely with AI-powered test generation, automated bug detection, and intelligent test result analysis. Using AI to write tests empowers you to deliver high-quality software while saving time, energy, and resources.

You need a modern QA testing solution to integrate AI into your testing processes properly. We suggest you try aqua, a cutting-edge, AI-driven test management solution designed to streamline your testing process, saving you time and effort by automating manual and repetitive tasks. With its powerful AI capabilities, aqua generates test cases from scratch, identifies potential bugs, and optimises your testing workflow. It is like ChatGPT, except that it is QA-tuned, secure, and aware of the context of your project.
